20 years of microfrontends



It’s difficult to tell exactly when I created my first website. I remember it all started with .de.vu domains for me, as they were free. I also managed to get a complimentary 1 megabyte of static webspace from some web host. I was in high school and didn’t want to spend the little money I had on a virtual calling card. The code I wrote back then was in C++, and that didn’t work well for websites.

A lesson from ancient history

Anyway, I didn’t get the fuss about websites. It was the year 199-something, and you could either connect to the internet or make a telephone call, not both simultaneously. The things I needed a connection for were newsgroups, email and FTP. When a friend showed me AltaVista, a significant search engine back then, I didn’t even know what to search for. But obviously, others had created pointless websites. So I had to, too.

And rather quickly, I ran into issues.

I tried to create websites with multiple pages right from the start. There was a home.html, about.html, links.html. I copied the header, navigation, and footer to every single page to be consistent.

And then I learned I could drop them all into folders and call the page index.html, and people would be able to open the pages without putting a .html at the end.

So I moved the files into folders. And then I had to change all links to all pages, in every single file. “That’s not how it should be”, I thought to myself and started to read some documentation. And the first important lesson I learned was how to do frontend composition via framesets.

Framesets

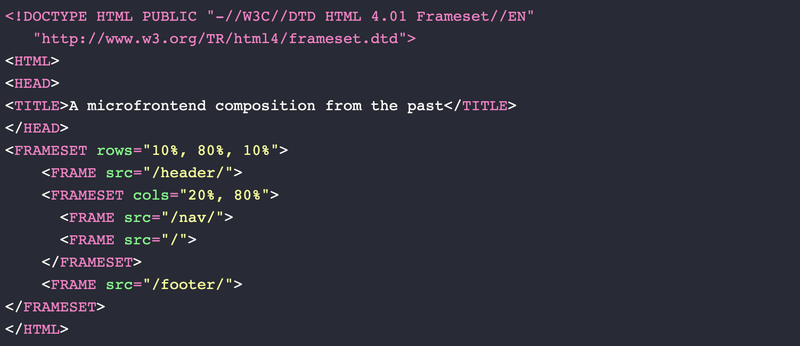

After redesigning my webpage to a frameset, the page looked somewhat like the following:

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Frameset//EN"

"http://www.w3.org/TR/html4/frameset.dtd">

<HTML>

<HEAD>

<TITLE>A simple frameset document</TITLE>

</HEAD>

<FRAMESET rows="10%, 80%, 10%">

<FRAME src="/header/">

<FRAMESET cols="20%, 80%">

<FRAME src="/nav/">

<FRAME src="/">

</FRAMESET>

<FRAME src="/footer/">

</FRAMESET>

</HTML>

That might create a frame layout something like this:

---------------------------------------
|                                     |
|               header/               |
|                                     |
---------------------------------------
|         |                           |
|         |                           |
|         |                           |
|  Nav/   |          Content          |
|         |                           |
|         |                           |
|         |                           |
|         |                           |
|         |                           |
---------------------------------------
|                                     |
|               Footer/               |
|                                     |
---------------------------------------

And rendered in a browser, it would roughly resemble the following:

If you click on one of the navigation links, you can see that the headline in the content part changes after a flicker. That is because an entirely different page is loaded inside this frame. If I want to change the navigation, only one page (nav/index.html) has to be changed, and the change appears for all visitors.

This setup, with a principal page managing the look and feel of the resulting homepage, has influenced my vocabulary to this day. From here on, we will refer to this master page as the frame.

You were also able to communicate between the frames, which allowed you to send events between pages. Take an e-commerce website with a shopping cart deployed to /shoppingcart/. It’s embedded on your ‘98 frameset HTML 4 website in a frame named frame_of_the_shopping_cart. On your product page /product/id, you would have an “add to shopping cart” button inside a form whose target is the other frame, and voilà, you would add products to the shopping cart. Different teams could develop those frame sites independently and release them without talking to other teams. Teams were not set up that way back then, but if they had been, frames would have let them work pretty independently on their products. In code, that would look something like this:

<!-- /index.html -->

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Frameset//EN"

"http://www.w3.org/TR/html4/frameset.dtd">

<HTML>

<HEAD>

<TITLE>A simple frameset document</TITLE>

</HEAD>

<FRAMESET rows="10%, 90%">

<FRAME name="frame_of_the_shopping_cart" src="/shoppingcart/">

<FRAME src="/product/id">

</FRAMESET>

</HTML>

<!-- /product/index.html -->

<form action="/shoppingcart/add_to_cart" method="POST" target="frame_of_the_shopping_cart">

<input type="hidden" name="productId" value="123">

<input type="submit" value="Add to shopping cart">

</form>

With forms and frames, you could create a dataflow model that would trigger specific parts of the page while others remain unchanged. Following a few ideas, you could build easily maintainable web applications that were pretty fast over the slow connections that were common back then:

- Keep the data sent between the frames to a minimum. For instance, post the product id and not the entire product. Passing only ids means your product detail page doesn’t have to know what the shopping cart needs, and it keeps contracts stable when you add long client-side caching to the individual frame pages.

- Have small, independent blocks of functionality on your homepage that follow user actions. Small fragments allow teams to work on specific parts and apply changes everywhere at once without aligning with others, with the main slice being a single page shown in a frame. Back then, that meant that the guestbook, the pictures and the articles were served from independent services and composed in the browser for the user.

There were, however, quite a few downsides to framesets. For instance, for your website to work like that, you have to allow arbitrary websites to POST and GET to your endpoints. An attacker could add your pages to their frameset, and they would be able to execute commands in the security context of your website.

Also, while you can create framesets with relative sizes, it was almost impossible to create a responsive layout. No breakpoints existed in the specification for framesets, and 20% can be very little space on small displays.

And finally, it wasn’t easy to optimise framesets for search engines.

Due to these shortcomings, HTML5 declared frames and framesets obsolete; the only thing that remains is the iframe, and I eventually had to migrate my website’s code to its second version.

iFrames

To this day, iFrames are in use on numerous websites. As each iFrame has its own window, it is easy to encapsulate styles, separate JavaScript, and perform tasks that should not impact the rest of the website. It also means that every page has to download all stylesheets, even if they are the same, increasing the total payload. And it makes it hard to optimise for search.

Let’s take a look at an example. As before, we want a shopping cart and multiple pages to search and display products. We can easily set this up with a static index.html which is served from every route not under /parts:

<body>

<header><iframe name="shopping-cart" src="/parts/shopping-cart"></iframe></header>

<nav><!-- shared navigation --></nav>

<iframe name="container" id="micro-frontend-container"></iframe>

<script type="text/javascript">

const {search, pathname} = window.location;

const microFrontendsByRoute = {

'/': '/parts/search' + search,

'/product': '/parts/product' + search

};

document.getElementById('micro-frontend-container')

.src = microFrontendsByRoute[pathname];

</script>

</body>
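One gap in the lookup above is worth a hedge: for a path that is not in the map, `microFrontendsByRoute[pathname]` is `undefined`, and assigning that to `src` makes the iframe request a relative URL called `undefined`. A minimal sketch of a more defensive lookup could look like this; the helper name `resolveMicroFrontend` and the fallback route `/parts/not-found` are assumptions for illustration, not part of the original setup.

```javascript
// Route table mirroring the script above. The fallback route is hypothetical.
const microFrontendsByRoute = {
  '/': '/parts/search',
  '/product': '/parts/product',
};

// Returns the iframe URL for a path, or the fallback for unknown paths.
function resolveMicroFrontend(pathname, search = '', fallback = '/parts/not-found') {
  // hasOwnProperty keeps inherited keys such as "constructor" from matching.
  const isKnown = Object.prototype.hasOwnProperty.call(microFrontendsByRoute, pathname);
  return isKnown ? microFrontendsByRoute[pathname] + search : fallback;
}

console.log(resolveMicroFrontend('/product', '?id=123'));
console.log(resolveMicroFrontend('/does-not-exist'));
```

In the container page, the result would be assigned to the `src` of the `micro-frontend-container` iframe instead of the raw map lookup.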

If the page https://example.com/ is opened, then the iFrame loads /parts/search; if /product is opened, then /parts/product is loaded. We can navigate, but how can we add a product to the shopping cart?

For that, we can use messages to communicate in the browser. First, we need something that acts as a bus in the container.

const allowedSenders = [

// window.frames[name] already is the frame's window object

window.frames["container"],

window.frames["shopping-cart"],

];

window.addEventListener("message", (evt) => {

if (allowedSenders.some(sender => sender === evt.source) && evt.origin === "https://example.com") {

const {target} = evt.data;

window.frames[target].postMessage(evt.data, "https://example.com")

} else {

console.log("not trusted")

}

}, false);

The container receives every message an iFrame sends to its parent and forwards it to the frame named in target. Next, we can set up the communication:

// /parts/product

window.parent.postMessage({ body: { productId: "123" }, action: "add", target: "shopping-cart" }, "https://example.com")

// /parts/shopping-cart

window.addEventListener("message", (evt) => {

if (window.parent === evt.source

&& evt.origin === "https://example.com"

&& evt.data?.target === "shopping-cart") {

const {action, body} = evt.data

if (action === "add") {

fetch("/api/shopping-cart", { method: 'PUT', body: JSON.stringify(body) })

// do more, like display the number of items in the cart

}

}

})

Looking at the flow, we can see how we managed to set up a very lightweight communication between the different microfrontends.

products      container     shopping-cart
   +              +               +
   |              |               |
   |   trigger    |               |
   +-------------->               |
   |   postMsg    |               |
   |              |               |
   |              |    trigger    |
   |              +--------------->
   |              |    postMsg    |
   |              |               +--------+
   |              |               | add    |
   |              |               | to     |
   |              |               | cart   |
   |              |               <--------+
   |              |               |
   |              |               |
   |              |               |
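The trust check in the container can also be pulled into a small pure function, which makes it testable without a browser. The following is a minimal sketch under assumptions: `routeMessage`, `TRUSTED_ORIGIN` and `KNOWN_TARGETS` are hypothetical names, and the event objects only need the `source`, `origin` and `data` properties that a `message` event provides.

```javascript
// Hypothetical names: TRUSTED_ORIGIN, KNOWN_TARGETS and routeMessage are assumptions.
const TRUSTED_ORIGIN = 'https://example.com';
const KNOWN_TARGETS = ['container', 'shopping-cart'];

// Decides whether a "message" event may be forwarded, and to which frame.
// Returns null for anything that should be dropped.
function routeMessage(evt, allowedSenders) {
  const fromKnownFrame = allowedSenders.includes(evt.source);
  const fromTrustedOrigin = evt.origin === TRUSTED_ORIGIN;
  const target = evt.data ? evt.data.target : undefined;
  if (!fromKnownFrame || !fromTrustedOrigin || !KNOWN_TARGETS.includes(target)) {
    return null;
  }
  return { target, message: evt.data, targetOrigin: TRUSTED_ORIGIN };
}

// In the container page, the listener could then shrink to something like:
// window.addEventListener('message', (evt) => {
//   const senders = [window.frames['container'], window.frames['shopping-cart']];
//   const route = routeMessage(evt, senders);
//   if (route) window.frames[route.target].postMessage(route.message, route.targetOrigin);
// });
```

Checking the target against a fixed list also keeps a message from addressing a frame the container never meant to expose.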

That wasn’t too bad. But iframes come at a cost. They are very isolated, which can make sharing difficult. It usually means you have to make sure you load the same stylesheets, maybe the same JavaScript frameworks, perhaps even some pictures in every frame. Caching can help there, but if you have a no-cache directive or links that are hard to cache, it will lead to a lot of data that has to be transferred.

They are in a different window; thus, the security context can be different.

Things like responsiveness and SEO can become cumbersome.

You might just come to the point where it gets out of hand, and you need to do something different. It was then that I turned to the server.

The dawn of the monolithic backend

The first Server Side Includes

When a teenager turned to server-side programming in the ’90s, they would eventually come into contact with scripting languages like PHP or ASP, maybe a little bit of JSP in school. It was effortless to get started, and there were plenty of free webspaces. Regardless of what you chose, you had an easy way to include and compose different page fragments: server-side includes.

The idea is simple enough - a statement on the page would include other files on the same server.

# index.php

<?php include 'header.html';?>

<?php include 'body.html';?>

<?php include 'footer.html';?>

' index.asp

<!-- #include file="header.html" -->

<!-- #include virtual="/lib/body.html" -->

<!-- #include file="footer.html" -->

<%-- index.jsp --%>

<%@ include file="header.html" %>

<%@ include file="body.html" %>

<%@ include file="footer.html" %>

All the code had to be in the same directory (or at least the same server and symlinked together). But it was the monoliths’ time, standalone fat applications deployed as a single package, and that wasn’t an issue. Every page request would result in a complete refresh, and thus, for a short period, adding something to a shopping cart was something that didn’t involve any frontend.
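What all three variants have in common can be sketched in a few lines: the server scans the page for include directives and pastes in the referenced files before sending the response. This is an illustration only, not how PHP, ASP or JSP implement it; `expandIncludes` is a hypothetical helper, and an in-memory object stands in for the files on the server.

```javascript
// Matches directives such as <!-- #include file="header.html" -->
const INCLUDE_DIRECTIVE = /<!--\s*#include\s+file="([^"]+)"\s*-->/g;

// Replaces every include directive with the content of the referenced file.
function expandIncludes(page, files) {
  return page.replace(INCLUDE_DIRECTIVE, (directive, fileName) => {
    const isKnown = Object.prototype.hasOwnProperty.call(files, fileName);
    // A missing file is left visible as a comment instead of failing the page.
    return isKnown ? files[fileName] : `<!-- missing include: ${fileName} -->`;
  });
}

// Hypothetical stand-in for the files in the server's directory.
const files = {
  'header.html': '<header>My Website</header>',
  'footer.html': '<footer>Bye</footer>',
};
const page =
  '<!-- #include file="header.html" --><main>Hello</main><!-- #include file="footer.html" -->';

console.log(expandIncludes(page, files));
// <header>My Website</header><main>Hello</main><footer>Bye</footer>
```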

During that time, my work with web applications turned more professional. It involved more application servers, where the frontend was rendered statically by the View of the Model-View-Controller pattern, the outermost representation of our information architecture. Life was easy, data flow was well defined, and we scaled our servers up to supercomputers to make sure the application had excellent response times.

The software development profession matured a lot back then; ideas like Domain-Driven Design helped decompose the application. Test-Driven Development (or at least Test-Heavy Development) became the norm. Event-driven approaches and the rise of functional paradigms in OO languages like C#, Scala and later Kotlin made the programs more robust.

But having a multitude of teams working on a single monolithic application introduced deploy-time and runtime dependencies that got harder and harder to manage.

Then microservices came along, and the ways of the monolith became obsolete. And with microservices came the term microfrontends.

Interlude - Defining Microfrontends

I have talked about microfrontends from the start and never really defined what I’m talking about when using the term. You might have guessed a direction based on the examples I have given. For this article’s sake, we want to use the definition that microfrontends are client applications that can be developed and delivered independently and are composed on a page during runtime and as late as possible.

Following this definition, the header and footer might not be a microfrontend (as they are not an application), but the shopping cart most certainly is. Setting your teams (and applications) up this way is easier said than done. If working on a monolithic codebase, the teams might be coupled in their tests, deployments (how often and when they can deploy code to production), database migrations, framework dependency updates and so on.

                       files
Team Search   +------> repo +-----------> /search
                       elasticsearch

                       files
Team Products +------> repo +-----------> /product/$id
                       database

                       files
Team Checkout +------> repo +-----------> /shopping-cart
                                          /checkout
                                          /orders

                       files
Team Payments +------> repo +-----------> /payment
                       database

Those independent teams create an entire product that has to play together nicely. A lot of those separations can be split up by different routes on the website. Others are not so easy. The most prominent example is the shopping cart, and this is the one I’m focusing on the most. That doesn’t mean we won’t look at the others, but as the shopping cart is something special (visible on most pages, holds state), it’s a great example.

We will use the teams above as a blueprint for the applications we create and for how we would solve them.

Server Side Includes in Microfrontends

We talked about Server Side Includes earlier. Back then, we faced some constraints: we had to reference static files on the same server, limiting our ability to compose pages from multiple sources. That wasn’t an issue because the monolithic application served the whole website anyway. As we split up the monolith and deployed it on numerous machines, people started to extend their edge gateways to consume URLs rather than files, load them and render the HTML into the page on the edge gateway.

In a nutshell: the main difference between classical SSI and what we have here is that we are now loading fragments from a URL. One way teams can achieve this is with Varnish’s Edge Side Includes. With them, you can create your pages from different fragments relatively quickly and deploy them independently.

<html>

<head>

<title>My Website - Product Detail</title>

<esi:include src="http://example.com/fragment/shared_styles" />

</head>

<body>

<header>

<esi:include src="http://checkout.example.com/fragment/navigation" />

<esi:include src="http://checkout.example.com/fragment/shopping_cart" />

</header>

<main>

<esi:include src="http://product.example.com/fragment/product_page" />

</main>

</body>

</html>
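Conceptually, the gateway resolves each of these tags by fetching the URL and pasting the response into the page. The sketch below illustrates that idea only; it is not how Varnish implements ESI. `composePage` is a hypothetical helper, `fetchFragment` is an injected function assumed to return a promise for the fragment’s HTML, and rendering a failed fragment as an empty slot is one possible fallback policy among several.

```javascript
const ESI_INCLUDE = /<esi:include\s+src="([^"]+)"\s*\/>/g;

// Replaces every <esi:include src="..." /> tag with the fetched fragment.
async function composePage(template, fetchFragment) {
  // Collect each distinct fragment URL once.
  const urls = [...new Set([...template.matchAll(ESI_INCLUDE)].map((match) => match[1]))];
  // allSettled lets one failing fragment degrade to an empty slot
  // instead of taking the whole page down.
  const results = await Promise.allSettled(urls.map((url) => fetchFragment(url)));
  const htmlByUrl = new Map(
    urls.map((url, index) => [
      url,
      results[index].status === 'fulfilled' ? results[index].value : '',
    ])
  );
  return template.replace(ESI_INCLUDE, (tag, url) => htmlByUrl.get(url));
}

// Usage with a fake fetcher, so the sketch runs without a network.
const fakeFragments = {
  'http://checkout.example.com/fragment/shopping_cart': '<div>cart: 2</div>',
};
const fakeFetch = async (url) => {
  if (!(url in fakeFragments)) throw new Error(`unavailable: ${url}`);
  return fakeFragments[url];
};

composePage(
  '<header><esi:include src="http://checkout.example.com/fragment/shopping_cart" /></header>',
  fakeFetch
).then(console.log);
```

Fetching all fragments in parallel keeps the page’s response time close to that of the slowest fragment rather than the sum of all of them.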

Using server-side includes like this, however, comes with tradeoffs. Managing your shared dependencies and styles can become complicated; there is a lot of responsibility on the teams to make sure they don’t break others. Managing contracts between the fragments is complex. You can solve this with more technology, but the cost of ownership will stay high. And the difficulty of understanding the impact of a single change in your fragment will profoundly hinder the teams’ autonomy. Finally, this setup requires all components to run locally, and injecting your development version takes additional work. As the application grows, it will be harder to maintain a good developer setup.

And there are cases where SSI will not work at all due to the environment.

Modularisation in the frontend

Server Side Includes work only if you have a single edge gateway that composes the content you need. That is not always the case, and it imposes new deploy-time dependencies between the teams on the edge gateway. There has to be a team to maintain the gateway and allow teams to manage the frame that acts as the composition. This artefact defines the SLA for your overall application, and one misfired deployment in your edge gateway will break everything.

How much easier were our lives when a frameset did this in the browser?

There has been a particular part of the web that never used anything else but the browser for that exact scenario - ads.

Ads

Ads have always been restricted by certain constraints: they come from outside the network of the website that displays them, they have to load fast, and they must neither modify the style of the rest of the page nor be affected by the settings there. There also has to be a fallback if the ad’s data isn’t available, and the website hosting the ad must not be affected by outages of the ad network. Over time, companies tested multiple approaches to ad integration.

The easiest one we have already seen: integration via iframe. Its strong scoping makes sure styles won’t leak in either direction, and a broken ad server will not affect the entire page. However, you will see an error page in the middle of the rest of the content, and the size of the iframe restricts the ad. Over time, the approach changed to a different method: widgets.

Widgets

The first time I became aware of widgets was around 2010, in jQuery UI. The idea was to create a single modular component and then be able to apply it to arbitrary elements. Let’s return to our shopping cart: we want the shopping cart visible on every page, with a label showing the number of items in the cart.

Using jQuery, we can create a widget like that:

// https://shopping-cart.example.com/js/index.js

$(function() {

$.widget("mywebsite.shoppingcart", {

_create: function() {

shoppingCartService.loadCountOfElementsInCart()

.then((count) => {this.count = count; this._refresh()})

this.cart = $('<div>🛒</div>').appendTo(this.element)

this.counter = $("<div>").appendTo(this.element)

this._refresh()

},

_refresh: function () {

if (this.count) {

this.counter.text(this.count)

}

}

})

$(".shopping-cart").shoppingcart()

})

Now, on every page where we add the script, every element with the class shopping-cart will be filled with a cart and a counter:

<script src="https://shopping-cart.example.com/js/index.js"></script>

<div class="shopping-cart"></div>

If the JavaScript is not available or has an error, the element stays empty and collapses without any other impact on the page. The widget can also expose functions that allow you to trigger specific methods from the outside or pass additional options. A more comprehensive example is shown here:

Creating modules via javascript can even be extended. A good example is Twitter, which allows you to add a feed as plain HTML first. The plain content will then get enhanced by a javascript function, for instance, adding styles. Having the static text will ensure that an outage will not affect the page’s content; visitors with javascript disabled can see the content nevertheless.

#Software #madeInGermany never had the same flavour as #cars created in Germany. Software actually has more of a smell. One that's leaking into the other products, like cars. But what can we do to change that? I have three ideas. #cto #business #Quality https://t.co/uejaEzHxYW pic.twitter.com/uhg9fjlOhS

— MatKainer (@MatKainer) February 21, 2021

What works great for small modules gets tedious if you try to roll it out in an organisation with hundreds of teams, each with their own frontend. There is no clear style encapsulation, maintaining shared state is complicated, and passing events between widgets is very difficult.

Besides, the frontend took a journey very similar to what we saw in the backends years before - it was the dawn of the monolithic frontend frameworks.

Frameworks like Angular and ReactJS make it easy to create monolithic single-page applications, and while the backend services got smaller and more decomposed, the browser applications got huge.

Framework components

It’s not as if the frameworks didn’t support modularisation. However, for most of them, it’s easiest to create one folder and structure all the files in there. In those files, you will have components that are independent of each other but coupled through the application they are embedded in.

Let’s move our shopping cart into a React application.

const Cart = ({ count }) => {

return (

<div className="shopping-cart">

<div>🛒</div>

<div>{count}</div>

</div>

);

};

The count of the shopping cart comes from a property, so from the outside. This allows the creation of stateless, reusable components. The button to add the product is in another stateless component, and everything is composed in the outer application component, the frame, that manages the state.

import React, { useState, useEffect } from 'react';

const App = () => {

const [count, setCount] = useState(0);

useEffect(() => {

shoppingCartService.loadCountOfElementsInCart().then(setCount);

}, []);

return (

<div>

<Cart count={count} />

<DetailPage

onItemAddedToCart={() =>

shoppingCartService

.addItemToCart()

.then(() => shoppingCartService.loadCountOfElementsInCart())

.then(setCount)

}

/>

</div>

);

};

The advantage of this is that the components themselves are dumb, and all the logic and state are in one place. As everything is very explicit, the code is easier to understand, and changes are simpler to follow than in the jQuery example. See the migrated project here:

The disadvantage comes when the project grows. In a setup with multiple teams sharing one application, this single point of state in the frame imposes a changeability limitation. Every change to the app requires coordination between all teams, and the company will need some governance to manage this.

More so, as all of this is one project, building and testing the project will get slower, and other teams will block deployments. All the things we overcame in the backend when moving away from monoliths are now coming back at us.

So how to split?

Setting up your projects

Monorepo approach

The first and most straightforward approach is to split up your dependencies into packages managed in a workspace. Yarn, a package manager for Node.js, comes with this feature.

Rather than decomposing your frontend into different applications (like you would in the backend with microservices), you create smaller packages and add them as a dependency into the main application. Thus, Workspaces equip you with the capability to have independent modules that the teams can work on independently.

Creating a workspace is easy. Add a package.json in the root of your application, and add the frame application into the my-website folder. Put the modules you want to share into the packages directory and create a package.json file for each of them.

.
├── my-website
│   └── package.json
├── package.json
└── packages
    ├── detail-page
    │   └── package.json
    └── shopping-cart
        └── package.json

In the root package.json, you set up the workspace like this:

{

"private": true,

"workspaces": ["my-website", "packages/*"]

}

If you yarn install the shopping cart in the my-website package, it will reference the package on your drive directly, and every change to the cart will be visible on the frame application immediately.

Also, you will not be required to run all tests for all packages on every change that you commit, and adding tooling on top, like Lerna, can improve the developer experience further.

You still have a strong dependency on the frame. Yarn also brings a lot of hidden complexity to the workspace, which puts a significant mental load on the developers and raises operating costs whenever something is broken.

Interlude: Dynamic Frontends

As your application grows, in addition to the everyday complexities of integration and deployment, another struggle will come up. As you go, for instance, international, the requirements for the components will change drastically. And it might not always be beneficial to solve this in the frontend.

An example of this is the format of postal addresses. The formatting can and will look different for different countries. You might be tempted to use your workspace to create a separate component for each country and use whichever one is active for the given market.

In my experience, that creates many issues; a weak spot of this approach is that your API will have to provide additional data for different countries. If you go that route, you will have to maintain a combinatorial behemoth of frontend components matching specific backend APIs.

As what the frontend displays is defined by the data the backend needs, I usually prefer to turn it around and have a general component that can render a specific form based on a definition provided by the backend. At the beginning of my career, developers often did this with Extensible Stylesheet Language Transformations (XSLT), transforming XML into another representation. A similar method is available for JSON data; it keeps you flexible and keeps the definition of how to render data in one place. Better yet, create a composition to manage the complexity in the backend and let the frontend generate a dynamic view based on the data the backend provides.

The last idea, to have this in the backend, is fundamental since it enables you to change a single artefact to modify the behaviour globally, without any interaction with or dependency on anything else: neither other teams nor your own frontend.

How does that look?

Let’s assume that you have an address object that is more or less capable of representing international addresses, for instance like this:

internal data class Address(

val honorific: String = "",

val firstName: String = "",

val lastName: String = "",

val address1: String = "",

val address2: String = "",

val `do`: String = "",

val si: String = "",

val dong: String = "",

val gu: String = "",

val number: String = "",

val zipCode: String = "",

val province: String = "",

val city: String = "",

val country: String = "",

)

Based on a template in your backend, you can now create a DSL that returns the required fields for a given language and country, something like this:

internal val internationalFormats: InternationalFormats.() -> Unit = {

`de` {

`DE` {

listOf(

Row(person, listOf("honorific", "firstName", "lastName")),

Row(person, listOf("address1", "address2", "number")),

Row(person, listOf("zipCode", "city")),

Row(person, listOf("country"))

)

}

}

}

For each row, you can now generate a field that looks like this:

{

controlType: 'textbox',

key: 'firstName',

value: 'First Name',

required: true

}

In the frontend, you just have to create a dynamic form based on that definition, which can be extended easily. As the configuration lives in the backend and can be read without understanding the code, it’s easy to extend and deploy.
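A minimal sketch of such a dynamic form could look like the following. The payload shape (one array per row, one object per field) and the helper names are assumptions for illustration, and the sketch assumes that `value` carries the label text, as in the field example above. It renders plain HTML strings to stay framework-independent.

```javascript
// Hypothetical backend response: one array per row, one object per field.
const germanAddressRows = [
  [
    { controlType: 'textbox', key: 'firstName', value: 'First Name', required: true },
    { controlType: 'textbox', key: 'lastName', value: 'Last Name', required: true },
  ],
  [
    { controlType: 'textbox', key: 'zipCode', value: 'Zip Code', required: true },
    { controlType: 'textbox', key: 'city', value: 'City', required: true },
  ],
];

// Escapes text before it is placed into markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Renders one field definition; only textboxes are supported in this sketch.
function renderField({ controlType, key, value, required }) {
  if (controlType !== 'textbox') {
    throw new Error(`Unsupported controlType: ${controlType}`);
  }
  const requiredAttribute = required ? ' required' : '';
  return `<label>${escapeHtml(value)} <input type="text" name="${escapeHtml(key)}"${requiredAttribute}></label>`;
}

// Renders all rows in the order the backend defined them.
function renderForm(rows) {
  const renderedRows = rows.map(
    (row) => `<div class="row">${row.map(renderField).join('')}</div>`
  );
  return `<form>${renderedRows.join('')}</form>`;
}

console.log(renderForm(germanAddressRows));
```

Supporting another country then means shipping a different row definition from the backend; the rendering code stays untouched.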

Build-time dependencies

As your organisation grows, so will the demand for deployment independence of the teams and smaller packages. At one point, using a Monorepo approach with a distributed version control system like git will no longer be supported by the teams, and they will try to get smaller repositories containing only the things relevant for them.

A relatively simple approach to make sure the dependencies are still managed is something we know from the backend world: artefact repositories that act as a provider for shared artefacts between teams.

A commit triggers a build that uploads a new patch version of the artefact. If that succeeds, the build triggers the frame project and thus a deployment there. This methodology is much more lightweight than before, as each team can focus on their artefacts with little effort, and builds can be rapid.

Note: Companies can achieve these benefits with more technology in a Monorepo as well, but the required hidden complexity is, in my opinion, higher than with a distributed setup.

Again, some drawbacks apply as well. The frame still has to be managed, although you can reduce the effort by allowing it to update patch and minor versions automatically if all tests are green. If the tests fail, though, the red test will block deployment for everybody.
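The automatic update rule can be written down as a small predicate. This is a sketch under assumptions: `canAutoUpdate` is a hypothetical helper, versions are plain `major.minor.patch` strings without pre-release tags, and `testsGreen` would come from the frame’s build.

```javascript
// Splits "1.4.2" into [1, 4, 2]; rejects anything that is not major.minor.patch.
function parseVersion(version) {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(version);
  if (!match) {
    throw new Error(`Not a plain semantic version: ${version}`);
  }
  return match.slice(1).map(Number);
}

// True only for a newer patch or minor version within the same major
// version, and only when the frame's tests passed with the candidate.
function canAutoUpdate(current, candidate, testsGreen) {
  const [currentMajor, currentMinor, currentPatch] = parseVersion(current);
  const [nextMajor, nextMinor, nextPatch] = parseVersion(candidate);
  const sameMajor = currentMajor === nextMajor;
  const isNewer =
    nextMinor > currentMinor || (nextMinor === currentMinor && nextPatch > currentPatch);
  return testsGreen && sameMajor && isNewer;
}

console.log(canAutoUpdate('1.4.2', '1.5.0', true)); // true
console.log(canAutoUpdate('1.4.2', '2.0.0', true)); // false
```

Major versions are deliberately excluded, since they are the ones that need coordination between teams.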

With distributed projects, the management of shared dependencies can be complex. How to track which dependency is using which version of the base framework? How to update shared dependencies?

And even more difficult: complex dependency trees. Think about our shopping cart button. The team providing it is probably the checkout team, sharing it with the product team. They also share the checkout icon in the menu with the navigation team. And the frame embeds the checkout pages.

If they schedule a major update, all versions in all dependencies have to be switched to the newest version to avoid having multiple versions on the website at the same time.

This problem is even worse if there are multiple frames in the organisation segregated around portfolios or products. As long as the teams work with build time integration, they will struggle to have a consistent single version deployed simultaneously.
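Detecting that situation is at least easy to automate. The sketch below assumes you can export a flat list of resolved dependencies per project; the record shape `{ dependent, dependency, version }`, the package names and `findVersionConflicts` are hypothetical, not the output of a specific tool.

```javascript
// Groups resolved dependencies by package and reports every package
// that is present in more than one version, with the projects using each.
function findVersionConflicts(resolved) {
  const versionsByDependency = new Map();
  for (const { dependent, dependency, version } of resolved) {
    if (!versionsByDependency.has(dependency)) {
      versionsByDependency.set(dependency, new Map());
    }
    const dependentsByVersion = versionsByDependency.get(dependency);
    if (!dependentsByVersion.has(version)) {
      dependentsByVersion.set(version, []);
    }
    dependentsByVersion.get(version).push(dependent);
  }

  const conflicts = {};
  for (const [dependency, dependentsByVersion] of versionsByDependency) {
    if (dependentsByVersion.size > 1) {
      conflicts[dependency] = Object.fromEntries(dependentsByVersion);
    }
  }
  return conflicts;
}

// Hypothetical snapshot after the checkout team released a major update.
const resolvedDependencies = [
  { dependent: 'frame', dependency: 'checkout-button', version: '2.0.0' },
  { dependent: 'product-page', dependency: 'checkout-button', version: '1.4.2' },
  { dependent: 'navigation', dependency: 'checkout-button', version: '2.0.0' },
  { dependent: 'frame', dependency: 'react', version: '16.12.0' },
];

console.log(findVersionConflicts(resolvedDependencies));
```

A check like this only reports the problem; aligning the versions still needs a coordinated release across the teams involved.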

Interlude: Sharing Component Patterns

We have only talked about integration issues so far, not about the right size for components to share. An excellent approach for right-sizing your elements is Atomic Design by Brad Frost.

Following his approach, I have had great experiences splitting up considerably large applications in the following way:

Atoms are a collection that a central design system provides and shares with other parts of the organisation.

The central team either provides molecules or teams create their own. Let’s take a search form as an example. There can be an Omnibox with only one textbox or a specialised search with multiple boxes like first name, last name, telephone number. The central team can provide the first, while the latter probably comes from the team delivering the search functionality.

When shaping your components, the critical thing to consider is that the search field will not return the results but only the query. Sharing as few business entities as possible follows the lessons we already learned with frames.

The search in the header can then pass the query to the list organism that shows all results. Communicating the search query can be done with standards like URL query parameters.

               ┌─Page────────────────────────────────────────────────┐
               │ Template "Search Result"                            │
               │ ┌─Header─────────────────────┬┬──────┬──────────┬─┐ │
               │ │                            ├┘search└──────────┤ │ │
               │ │                            │                  ├─┼─┼─────────┐
               │ │                            ├──────────────────┤ │ │ 1.      │
               │ └────────────────────────────┴──────────────────┴─┘ │ query   │
               │                                                     │ =       │
2.             │ ┌─Result─List─────────────────────────────────────┐ │ "search"│
POST Backend   │ │                                               ◄─┼─┼─────────┘
┌──────────────┼─┤ ┌───────────┐ ┌────────────┐ ┌────────────┐     │ │
│              │ │ │           │ │            │ │            │     │ │
│              │ │ │           │ │            │ │            │     │ │
▼              │ │ │           │ │            │ │            │     │ │
               │ │ └───────────┘ └────────────┘ └────────────┘     │ │
               │ │                                                 │ │
│              │ │ ┌───────────┐ ┌────────────┐                    │ │
│              │ │ │           │ │            │                    │ │
└──────────────┼─► │           │ │            │                    │ │
pass result    │ │ │           │ │            │                    │ │
to frontend    │ │ └───────────┘ └────────────┘                    │ │
               │ │                                                 │ │
               │ └─────────────────────────────────────────────────┘ │
               │                                                     │
               └─────────────────────────────────────────────────────┘

In this example, as the product team, we will be sharing the search molecule with the navigation team. By listening to our event that provides the query, we know what we need to search. A service will connect the result list to the backend to receive the matching items from our data store and display the results.

The product team can change the list independently of every other team and adjust the backend APIs without waiting for a new navigation release.
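The hand-over of the query via URL parameters described above can be sketched with the standard `URL` and `URLSearchParams` APIs. The parameter name `query` follows the diagram; the helper names and the example URL are assumptions for illustration.

```javascript
// Used by the search molecule: puts the query into the URL of the result page.
function buildSearchUrl(resultPageUrl, query) {
  const url = new URL(resultPageUrl);
  url.searchParams.set('query', query);
  return url.toString();
}

// Used by the result list: reads the query back, independent of who set it.
function readSearchQuery(href) {
  return new URL(href).searchParams.get('query') ?? '';
}

const target = buildSearchUrl('https://example.com/search', 'red shoes');
console.log(target); // https://example.com/search?query=red+shoes
console.log(readSearchQuery(target)); // red shoes
```

The only contract between the two teams is the parameter name, which is about as small as a shared interface can get.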

As long as we rely on build-time integration, we haven’t gained much, as composing the dependencies still requires a shared deployment.

Runtime dependencies

We continue to be constrained by the fact that our dependency management is tightly coupled to the frame releases. Compared with backend services that integrate via HTTP endpoints provided by independently released microservices, what we have achieved so far is highly unsatisfactory.

Tools and frameworks have popped up to help us, but most importantly, new standards start to evolve. Those standards aim to allow for a stronger modularisation and help with dependency management. Unfortunately, a few of them are still in the draft phase, and we need frameworks to polyfill the functionality for them.

One of the strongest in that area at the moment is single-spa.

Single-spa is a framework that lets you register applications and load them at runtime from their deployed location using import maps. Locally, you start only the application you are working on, unlike the build-time approaches, where you always need everything on your machine.

In addition, it helps you manage dependencies and even allows running multiple frameworks in parallel (although you should be careful before doing so). I created an example repository on github to give you a chance to see a react+vue project running in parallel.

First, it requires you to wrap your application inside a lifecycle management container. There are helper libraries for most frameworks; for our shopping cart built with react, this would look like the following:

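import React from 'react';
import ReactDOM from 'react-dom';
import singleSpaReact from 'single-spa-react';
import {rootComponent} from './shoppingCart';

const lifecycles = singleSpaReact({
  React,
  ReactDOM,
  rootComponent
});

// single-spa expects the application module to export these functions
export const {bootstrap, mount, unmount} = lifecycles;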

Now you can create a systemjs module from it and publish it to your CDN. In your frame, you can now reference the location in the import map and register the application in Single-spa.

<script type="systemjs-importmap">
  {
    "imports": {
      "react": "https://cdn.jsdelivr.net/npm/react@16.12.0/umd/react.production.min.js",
      "react-dom": "https://cdn.jsdelivr.net/npm/react-dom@16.12.0/umd/react-dom.production.min.js",
      "single-spa": "https://cdn.jsdelivr.net/npm/single-spa@4.4.1/lib/system/single-spa.min.js",
      "@my-website/shopping-cart": "https://cdn.example.com/shopping-cart/js/index.js"
    }
  }
</script>
<script>
  System.import("single-spa").then(function(singleSpa) {
    singleSpa.registerApplication({
      name: "@my-website/shopping-cart",
      app: () => System.import("@my-website/shopping-cart"),
      activeWhen: () => true,
      customProps: {}
    });
    singleSpa.start();
  });
</script>

As you can see, we loaded react globally as well. That means we are not required to ship it with the shopping cart. Once multiple packages share dependencies, this decreases the payload of each package and makes the shared dependencies easier to manage.

Interlude: State Management

As we split up components more and more, state management becomes difficult. As teams are distributed and talk to each other less in the real world, managing their components and their shared state requires more effort. In the end, all those atoms, molecules and organisms work together on a single product.

As teams are given more freedom, the state of your application becomes more distributed. As a result, handling global state or debugging errors gets difficult.

Let’s take a typical checkout funnel as an example. As our application grew international, management decided to scale to multiple teams. There’s a profile team taking care to collect the address. Team payment manages the payment methods, and team delivery takes care of shipment options. Team order management will then take the data that was collected during the process and assign it to an order that can be dispatched.

In different countries, there might be slightly different processes. In some, you might want to start with payment and take the data you get from them to fill out the profile. In others, you start with the profile as you need the federal state to understand which payment options are available. How can we define such a process without introducing a team managing the rules and being destined to become a bottleneck team for any change in the process?

What is the best way to enable order management to collect all the data once the checkout process is complete?

In the backend, this type of communication is often handled via an event-based storage system like Kafka. It allows dependent systems to watch for specific events and trigger commands in case conditions are fulfilled.

In the frontend, we don’t have infrastructure artefacts that allow us to pass on those events based on topics. So frameworks evolved to help you with those things. One of the most common nowadays is redux, which allows you to manage your state globally.

I prototyped a library, organismus, to achieve something similar but with a vocabulary closer to the organisms from atomic design. The idea is that an organism needs specific stimuli triggered by hormones to show certain reactions.

When hormones are released globally, receptors are triggered locally and can act on the stimulus. Take a look at the simple counter example below:

const countingHormone = defineHormone("counter", { count: 0 });

pureLit("some-element", (element) => {
  const count = useState(element, 0);
  // update the local state whenever the hormone is released
  useReceptor(countingHormone, async (value) => count.publish(value.count));
  return html`<p>Receptor State: ${count.getState()}</p>`;
});
// some-element: <p>Receptor State: 0</p>

// release hormone; the callback receives the current hormone value
releaseHormone(countingHormone, (current) => ({
  count: current.count + 1,
}));
// some-element: <p>Receptor State: 1</p>

In addition, a hypothalamus allows you to orchestrate hormone cascades for more complex scenarios. Take team order management in our shopping cart example - what they need to do is wait for all the previous steps to be complete and the “complete checkout” button to be pressed. With Organismus, we can define hormones for each process and let the hypothalamus trigger the final step once everything is done:

// defined by the teams

const profile = defineHormone<ProfileReference>("profile");
const payment = defineHormone<PaymentReference>("payment");
const orderNow = defineHormone("orderNow");

// in order management
// the hypothalamus will only be triggered once all hormones are released
hypothalamus.on([profile, payment, orderNow], (result) => {
  console.log("Profile Reference:", result.profile);
  console.log("Payment Reference:", result.payment);
});

Such a setup is distributed enough to give the teams flexibility while introducing some “infrastructure” that helps the teams to align their business processes. As was true with framesets, you still want to limit the amount of data passed (and thus shared) between the teams. Rather than passing around the full profile data, the profile team should only provide a reference. It’s their responsibility to make sure that the data is available and persisted once the checkout is complete, which they can do by adding a receptor for the orderNow hormone.
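To make the "wait until every step has reported" idea more tangible without any library, here is a minimal sketch of a topic-based bus in plain JavaScript. It is not how organismus is implemented; `createBus`, `onAll` and the reference payloads are hypothetical names chosen for this illustration.

```javascript
// A tiny topic-based bus; every name in here is hypothetical.
function createBus() {
  const subscribers = new Map(); // topic -> array of callbacks

  function subscribe(topic, callback) {
    if (!subscribers.has(topic)) subscribers.set(topic, []);
    subscribers.get(topic).push(callback);
  }

  function publish(topic, payload) {
    (subscribers.get(topic) ?? []).forEach((callback) => callback(payload));
  }

  // Calls `callback` once, as soon as every listed topic has published at least once.
  function onAll(topics, callback) {
    const latest = {};
    let fired = false;
    topics.forEach((topic) =>
      subscribe(topic, (payload) => {
        latest[topic] = payload;
        if (!fired && topics.every((t) => t in latest)) {
          fired = true;
          callback({ ...latest });
        }
      })
    );
  }

  return { subscribe, publish, onAll };
}

const bus = createBus();
const orders = [];
bus.onAll(["profile", "payment", "orderNow"], (result) => orders.push(result));

// The order of the steps does not matter, only that all of them happened.
bus.publish("payment", { ref: "pay-42" });
bus.publish("profile", { ref: "profile-7" });
bus.publish("orderNow", true);
console.log(orders);
```

Note that only references travel over the bus, in line with the advice above: each team keeps its own data and shares just enough for the others to find it.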

Into the future

Looking back at the more than 20 years since I started with web development, there have been some developments. The lessons we learned in the early days haven’t changed, though: pass as little data as possible between the fragments of your page, and slice your software along what you show to the user.

There are things we had with frames that we haven’t fully achieved with the mechanisms I have shared so far, especially the strong encapsulation that frames gave us.

There is a web standard that helps us with that, though, and it also provides a few benefits for which we needed frameworks before.

Web Components

It’s slightly ironic to speak about Web Components in a chapter that’s called “Into the future”, given web components were introduced in 2011. It took some time for them to take off, but lately, they have gained some traction.

Web Components are actually not a single technology but a collection of specifications. Together, they provide the foundation for encapsulation as strong as with frames, plus the mechanisms needed to build applications that run as a single application in the browser.

Custom Elements

First, they allow the creation of custom elements. Custom elements are similar to the framework components we saw above, but unlike them, they don’t need a framework to be rendered; the browser takes care of that.

You can create a custom element by defining a class that extends HTMLElement, registering it in the browser using customElements.define, and then referencing it in your HTML via the newly created tag:
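A minimal sketch of such an element could look like the following. The tag name `add-to-cart` and the `registerAddToCart` wrapper are assumptions for this illustration; the wrapper only exists so the snippet can also be exercised outside a browser. The button is appended in `connectedCallback`, which is a choice made here because adding children inside the constructor can fail depending on how the element is created.

```javascript
// Registers a hypothetical <add-to-cart> element on the given window object.
function registerAddToCart(win = globalThis) {
  class AddToCart extends win.HTMLElement {
    // Runs when the browser attaches the element to the page.
    connectedCallback() {
      if (this.firstChild) return; // already rendered, e.g. after being moved
      const button = win.document.createElement("button");
      button.innerText = "🛒";
      this.appendChild(button);
    }
  }
  win.customElements.define("add-to-cart", AddToCart);
  return AddToCart;
}

// In a browser, register once; afterwards <add-to-cart></add-to-cart>
// can be used anywhere in the HTML like a built-in tag.
if (typeof window !== "undefined") registerAddToCart(window);
```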

The magical thing about it is that we could just as well have put the script in a file, deployed it to a CDN and referenced it, achieving the same as with Single-spa while using only what the browser gives us. And custom elements are only the first part of the specification.

Shadow DOM

The second feature it gives us is Shadow DOM. This functionality allows us to place elements in a separate DOM, thus creating a stronger encapsulation. Why is this important? Think about sharing our button component with another team, and this team has a global style that hides all button elements:

button { display: none; }

In the component we shared, our button is gone. Now, usually, teams don’t randomly hide buttons. But they may have styles or scripts that collide with yours. One example of what can go wrong is an incident from one of my previous teams: a team added a check to their form to keep people from accidentally closing the page. The selector for the check was applied globally to every a-tag, though, so on every page, whenever a user clicked a link, a popup would come up warning of potentially unsaved changes.

With Shadow DOM, you can completely avoid any of those issues simply by changing the line where we add the button to the document from this.appendChild(button); to this.attachShadow({mode: 'open'}).appendChild(button).

Now our button is in a hidden DOM that cannot be accessed accidentally from the outside.

Templates and Slots

And last, there are templates and slots. They allow you to create HTML markup that you can use to create the rendered page. In our shopping cart button example, we can use them to create a nicer button and allow the outside world to provide the text like so:

<template id="my-button">
  <style>
    button {
      background-color: #ccc;
      padding: 5px;
      cursor: pointer;
    }
  </style>
  <button>
    <slot>🛒</slot>
  </button>
</template>

In our code we no longer need to create the button manually, but we can access the template and use it instead:

 class Button extends HTMLElement {
   constructor() {
     super();
-    const button = document.createElement("button");
-    button.innerText = "🛒";
-    this.attachShadow({mode: 'open'}).appendChild(button)
+    const template = document.getElementById('my-button').content;
+    this.attachShadow({mode: 'open'})
+      .appendChild(template.cloneNode(true))
   }
 }

In addition, the slot will allow users of the component to change the text displayed in the button.

Adding convenience

Having web components removes the need to use frameworks; however, unlike with frameworks, the developer experience is not that great when creating custom elements, shadow dom and templates. A lot of plumbing has to be done, and things like state management, passing complex elements as attributes, and managing your registered elements can take a lot of time.

Luckily, there are frameworks that can help you with that. The one I consider strongest at the moment is LitElement (the successor to Polymer), which makes writing web components straightforward. On top of it, I use my own pureLit to have functional components with hooks.

The button above can be rewritten as this snippet:

pureLit("add-to-cart", () =>
  html`<button>
    <slot>🛒</slot>
  </button>`
, {
  styles: css`
    button {
      background-color: #ccc;
      padding: 5px;
      cursor: pointer;
    }`
})

For a stateful example, we can look at a counter that takes its initial value from an attribute:

pureLit("simple-counter", (el) => {
  const state = useState(el, el.value)
  return html`
    <div>Current Count: ${state.getState()}</div>
    <button @click=${() => state.publish(state.getState() + 1)}>
      <slot>Increment</slot>
    </button>`
}, {
  defaults: {
    value: 0
  }
})

Which we can use as

<simple-counter value="100"></simple-counter>

When combining this with systemjs modules, we can create modules that have all the conveniences from dependency injection while allowing us to easily include them during runtime. Our frame could then look like this:

<!DOCTYPE html>
<html>
  <head>
    <title>Example Product Details Frame</title>
    <script type="module" src="https://cdn.example.com/shopping-cart.js"></script>
    <script type="module" src="https://cdn.example.com/navigation.js"></script>
    <script type="module" src="https://cdn.example.com/product.js"></script>
  </head>
  <body>
    <menu-top>
      <shopping-cart slot="pull-right"></shopping-cart>
    </menu-top>
    <product-detail>
      <shopping-cart-button-add slot="call-to-action"></shopping-cart-button-add>
    </product-detail>
    <menu-footer></menu-footer>
  </body>
</html>

And whenever the shopping cart team deploys a new version, it will be used without being required to align with the others.

In this scenario, we can still use the import maps we saw in Single-spa to further reduce our package sizes and provide LitElement and pureLit as global dependencies:

<!DOCTYPE html>
<html>
  <head>
    <title>Example Product Details Frame</title>
    <script type="systemjs-importmap">
      {
        "imports": {
          "lit-element": "https://cdn.jsdelivr.net/npm/lit-element@2.4.0/lit-element.min.js",
          "pure-lit": "https://cdn.jsdelivr.net/npm/pure-lit@0.3.3/dist/index.min.js",
          "@my-website/shopping-cart": "https://cdn.example.com/shopping-cart.js",
          "@my-website/menu": "https://cdn.example.com/navigation.js",
          "@my-website/product": "https://cdn.example.com/product.js"
        }
      }
    </script>
  </head>
  <body>
    <menu-top>
      <shopping-cart slot="pull-right"></shopping-cart>
    </menu-top>
    <product-detail>
      <shopping-cart-button-add slot="call-to-action"></shopping-cart-button-add>
    </product-detail>
    <menu-footer></menu-footer>
  </body>
</html>

Import maps are not a standard yet, but once they are, the browser will take care of everything for us, and no framework has to be involved in keeping the DOM in sync or our microfrontends connected.

Interlude: The case of the missing contract tests

Creating components and decoupling them enables teams to be more independent. This means, however, that more and more changes happen without you as a team noticing, and you will want to know if someone breaks you or if you break somebody else. Ideally, you’d even know before you break them.

In the backend, for APIs, this is sometimes done via contract tests. Those tests sit between mocked tests and integration tests. Similar to mocked tests with recordings, the consumer (the participant that uses an API) creates a recording of the expected API call and result. This recording is then shared with the provider (the participant owning the API), who can run a test against their own API to check if they fulfil the expected result.

More importantly, with every change they make to the API, they automatically check that they won’t break the expectations of the consumer, and if they do, they can also see how and why. They can use the information the failing test provides to talk to the consumer, informing them about the change and its impact.

The issue is similar in the frontend. Instead of API calls, we have properties that control what we request from the components, and the view and events we expect from them.

Let’s take our shopping cart button as an example. As Team Product, we have certain expectations for this button, mainly that it adds the right product to the shopping cart. We would probably formulate our assumptions like this:

GIVEN we have the shopping-cart-button-add component
  AND we pass an article id as parameter `id`
WHEN this component is added to the page
THEN there is a button that can be used to add something to the cart

GIVEN we have the shopping-cart-button-add component
  AND we pass the article id "1234" as parameter `id`
WHEN a user clicks the button
THEN the product "1234" should be added to the cart

This works in the beginning, but as the product grows, more requirements pop up, and the Shopping Cart team needs more information to place the item into the cart correctly. There might be optional campaigns that have an impact on the price, so they need the campaignId as well and decide to rename the id property to articleId. They make sure that both variants work for a time and tell Team Product; the developers there make the adjustments, and Team Shopping Cart removes the id. A few hours after they deploy the change, a very stressed PO from Team Proposal calls, complaining that the team broke the functionality of their “people also bought” boxes.

What happened? Back in the day, this box belonged to Team Product, and they just added the button as they knew how it worked. As the proposal area got more sophisticated, a new team was created to take care of it, and they inherited the button. The shopping cart team wasn’t aware that this team existed, nor that they used their button, so they didn’t think of notifying them.

Contract-based tests like those in the backend would have helped in that case: the proposal team would have maintained tests verifying this behaviour, and when the Shopping Cart team pushed the change, the tests would have failed, and the new button wouldn’t have been deployed.
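Reduced to its core, the check that would have caught this incident can be sketched in a few lines. The shape of a "contract" below (a list of the properties each consumer passes) is a hypothetical simplification for this illustration, not the format of any existing tool.

```javascript
// Each consumer records which properties it passes to the component.
// Team and property names come from the story above.
const contracts = [
  { consumer: "Team Product", component: "shopping-cart-button-add", passes: ["articleId", "campaignId"] },
  { consumer: "Team Proposal", component: "shopping-cart-button-add", passes: ["id"] },
];

// The provider lists the properties its next release still understands
// and gets back every consumer that would break.
function findBrokenConsumers(component, acceptedProps, allContracts) {
  return allContracts
    .filter((contract) => contract.component === component)
    .map((contract) => ({
      consumer: contract.consumer,
      unknownProps: contract.passes.filter((prop) => !acceptedProps.includes(prop)),
    }))
    .filter((report) => report.unknownProps.length > 0);
}

// Release candidate that drops the old `id` property.
const broken = findBrokenConsumers(
  "shopping-cart-button-add",
  ["articleId", "campaignId"],
  contracts
);
console.log(broken);
```

Run as part of the provider's pipeline, a non-empty result would block the release and name the team to talk to, even if the provider never knew that team existed.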

This is only one example. As your components grow and are used in more and more places on the page, understanding how they are used and what expectations come with them is imperative. For build-time integration, you can simply run unit tests to catch those scenarios. For runtime integration, it is almost impossible at the moment, as I’m not aware of any framework that implements this use case. This is an impediment to making releases of runtime-integrated components as safe and controlled as API releases.

To show the basic idea of how this could work, I created an example repository doing something similar via jest and dom-testing-tools (http://github.com/MatthiasKainer/contracts4components).

The consumer creates a definition of how they want to use the component and executes it with a test function like so:

await test(
  container, // the container under test
  definition, // the definition to execute
  path.join(__dirname, "..") // the location where to put the contract
)

This will create a file that can be run in a test by the provider using their component like this:

render(myComponent)
// this runs the tests defined in the definition
require("./some.contract").expectedContract()

Following this idea, the workflow for the contracts looks like this:

      ┌─────────────┐  ┌─────────────┐  ┌──────────────┐
      │             │  │             │  │              │
      │  Consumer   │  │   Broker    │  │   Provider   │
      │             │  │             │  │              │
      └─────┬───────┘  └──────┬──────┘  └───────┬──────┘
            │                 │                 │
┌───────────┤                 │                 │
│           │                 │                 │
│create     │                 │                 │
│green      │                 │                 │
│test       │                 │                 │
└───────────► mock            │                 │
            │ component       │                 │
            │                 │                 │
            │ share           │                 │
            ├─────────────────►                 │
            │ mock            │                 │
            │                 │ receive mock    │
            │                 ├─────────────────►
            │                 │                 │
            │                 │                 ├────────────┐
            │                 ◄─────────────────┤            │
            │                 │ test red        │ implement  │
            │                 ◄─────────────────┤ component  │
            │                 │                 │            │
            │                 │                 ◄────────────┘
            │                 │ test green      │
            │                 ◄─────────────────┤
            │                 │                 │

Setting up those tests can make your component sharing much more controlled, as changes by the providers will be detected early (and at the provider side), and larger changes can be communicated to all consumers of your services and rolled out in a very controlled way.

Module Federation

As microfrontends grow, dependency management will get really difficult. The goal will be to minimise payload by not loading shared dependencies multiple times, but some teams will be slower, others quicker in updating their versions. With decoupled deployments, making sure you load the same dependency as little as possible but as often as needed, and providing it to the component, is a field of science on its own.

Not too long ago, webpack came to the rescue with a feature that helps tremendously there: Module Federation.

Module federation allows you to specify local and remote modules and expose the remote ones via a container. That might be slightly difficult to understand, so let’s take a simple example.

Dates in javascript are challenging, so people use libraries. One I have worked with a few times already is moment. It’s no longer the leading choice for most new projects, as it has moved into maintenance mode, but you will still find it in a lot of projects.

And it adds a considerable footprint to your page.

Now with systemjs-importmaps, we can specify one version for everyone. But what if not everyone wants to use the new version?

Let’s build up our example.

There was a change between versions 2.9.0 and 2.10.0 that modified the behaviour when comparing empty dates.

// 2.9.0
expect(moment("", "DD-MM-YYYY").isBefore(moment("2021-01-01"))).toBeTrue()

// 2.10.0
expect(moment("", "DD-MM-YYYY").isBefore(moment("2021-01-01"))).toBeFalse()

For most teams, this isn’t an issue, as they never considered this a legitimate case. However, one team used the empty string for precisely that case, and changing that would take effort they don’t have time for right now.

This scenario is one of the cases where module federation will come to the rescue. Let’s take a look at the project setup for the teams.

All of them are defining their moment.js version in their package.json, like this:

{
  "name": "@my-website/product-team",
  "version": "0.0.0",
  "private": true,
  "dependencies": {
    "moment": "^2.0.0"
  }
}

For this team, that means they will automatically accept every moment version from >= 2.0.0 to < 3.0.0. In webpack, you can now configure this dependency as a shared module like so:

const { ModuleFederationPlugin } = require("webpack").container;

module.exports = {
  entry: "./src/index",
  mode: "development",
  // ...
  plugins: [
    new ModuleFederationPlugin({
      name: "product-team",
      // ...
      shared: {
        // adds moment as shared module
        // version is inferred from package.json
        // it will use the highest moment version that is >= 2 and < 3
        moment: require("./package.json").dependencies.moment,
      },
    }),
  ],
};

The first time a module is loaded with a shared dependency on moment, webpack will load the dependency from the remote that hosts the microfrontend.

Every microfrontend that comes after and depends on a version of moment that matches the one already loaded will reuse the available one. To avoid being updated, all the team has to do is pin their version to 2.9.0 like so:

{
  "name": "@my-website/shop-team",
  "version": "0.0.0",
  "private": true,
  "dependencies": {
    "moment": "2.9.0"
  }
}

This will allow this team to use the version they need, while the others could use the newest one. Could, because there is an exciting race condition going on now.

If the microfrontend for @my-website/product-team is loaded first, the product team will load a high version of the dependency, like 2.29.1, and the shop team will then load 2.9.0. If the microfrontend for @my-website/shop-team is loaded first, 2.9.0 will be the version the product team is given as well, as their version range includes it.
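The effect of the load order can be replayed with a small simulation. This is a deliberately simplified model of the behaviour described above, not webpack's actual resolution algorithm; `satisfies` only understands exact versions and caret ranges, and the `ships` field (the version a team would bring along itself) is an assumption for this illustration.

```javascript
// Parses "2.29.1" into [2, 29, 1].
const parse = (version) => version.split(".").map(Number);

// Supports only exact versions ("2.9.0") and caret ranges with major >= 1 ("^2.0.0").
function satisfies(version, range) {
  if (!range.startsWith("^")) return version === range;
  const [major, minor, patch] = parse(version);
  const [minMajor, minMinor, minPatch] = parse(range.slice(1));
  if (major !== minMajor) return false;
  if (minor !== minMinor) return minor > minMinor;
  return patch >= minPatch;
}

// A team reuses an already loaded version if it fits its range,
// otherwise it loads the copy it ships itself.
function simulate(loadOrder) {
  const loaded = [];
  const used = {};
  for (const team of loadOrder) {
    const match = loaded.find((version) => satisfies(version, team.range));
    if (!match) loaded.push(team.ships);
    used[team.name] = match ?? team.ships;
  }
  return used;
}

const product = { name: "product-team", range: "^2.0.0", ships: "2.29.1" };
const shop = { name: "shop-team", range: "2.9.0", ships: "2.9.0" };

console.log(simulate([product, shop])); // product first
console.log(simulate([shop, product])); // shop first
```

With the product team first, each team ends up on its own version; with the shop team first, both end up on 2.9.0, which is exactly the order dependence described above.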

This shows that there are still dragons around, and we are not yet at a point where microfrontends are 100% predictable. But we have come a long way since the original iframes, and every year the technology is becoming more mature.

I can only dream about where we will be in 20 years and how we will be building applications for the web.